
An insider's look at EVS's innovation strategy in the age of AI

26 November 2025

Q&A with Olivier Barnich, VP Innovation and Architecture at EVS

Innovation at EVS has always been guided by a single mission: helping creators tell better stories. In this Q&A, Olivier Barnich, VP Innovation and Architecture, shares insights into EVS’s innovation strategy and explains how the company’s structured model keeps its exploration of cutting-edge technologies like AI firmly grounded in real-world value.

How has EVS's approach to innovation evolved over the years?

Innovation has always been part of who we are. From our early days inventing the tapeless workflow, a breakthrough that revolutionized live content management, to developing today’s AI-powered tools, our mission has remained the same: helping our customers produce better, faster, and more creatively.

Over time, we've evolved our approach to emphasize speed and relevance. Following a “fail fast” philosophy, we quickly move from idea to proof of concept, gather customer feedback, and only advance when there’s clear, demonstrated value. This keeps us agile while still encouraging bold experimentation.

At the same time, we maintain a long-term vision. Some innovations take years to mature, and we’re willing to invest early when we see strategic potential. The Zoom feature for LSM-VIA, which lets operators instantly zoom into live replays, is a great example; it reflects years of background research and development, yet we were able to adapt and release it rapidly when the market signaled a clear need for it. That balance between foresight and agility defines our innovation rhythm.

Internally, we’ve built an environment where creativity can thrive. Regular hackathons give teams room to explore new ideas, and our annual R&D conference brings together hundreds of engineers from around the world to collaborate and spark fresh thinking.

Crucially, we ensure innovation stays grounded in real production needs. At our latest R&D conference, more than 300 team members stepped into roles like camera operators, directors, replay operators, and journalists, experiencing the pressure and precision of live production firsthand. That immersion sharpened everyone’s understanding of where our technology can create the greatest impact.

What’s your process for identifying and prioritizing the innovations worth investing in?

Innovation, for us, means solving real problems for real users. As a customer-intimate company, we see our role as helping broadcasters and production teams achieve their goals, bringing in new approaches only when they create clear, meaningful value.

To keep that focus, we rely on a short and continuous feedback loop with our users. We test early technologies and prototypes directly in real production environments, which lets us validate their value, refine them quickly, and invest where the market truly demands it.

We also know that customers operate on different timelines: some need immediate solutions, while others are exploring longer-term possibilities. Understanding these differences, and adapting our innovation approach to each of them, is exactly what the EVS Innovation team is here for.

Ultimately, we look for the right moments where a new technology can truly meet a market need, and we focus our efforts where they will have real impact.

Guided by our vision, we’ve set ambitious goals and are actively working behind closed doors on key developments. These efforts will lead to major innovations in the years to come: not just incremental improvements, but meaningful advances for the industry.

AI now powers many of EVS’s solutions, from replay systems to media management. What tangible value does this bring to your customers?

Every decision ties back to our mission of creating “return on emotion”, giving storytellers the tools to amplify the moments that move audiences. Our AI developments are designed not as standalone technologies, but as tools that bring real, practical value to everyday workflows.

Take XtraMotion, for example. It uses generative AI to turn standard replays from any camera angle into super slow-motion clips, remove blur so that otherwise unusable footage becomes crisp and clear, and add a cinematic touch that highlights a player’s reaction after a key moment.
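Super slow motion from a standard camera is commonly described as inserting synthetic frames between the captured ones, so that the clip plays back at the normal frame rate but lasts longer. The sketch below only does that bookkeeping, assuming evenly spaced synthetic frames and an integer slow-down factor; the function names are hypothetical, and the generative model that actually synthesizes each frame is not shown and is not EVS's implementation.

```python
def interpolated_timestamps(t0: float, t1: float, factor: int) -> list:
    """Timestamps (in seconds) of the synthetic frames needed between two
    captured frames at t0 and t1 to slow playback down by `factor`.

    Keeping the output frame rate equal to the capture rate means each
    captured interval must be filled with factor - 1 evenly spaced frames.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    step = (t1 - t0) / factor
    return [t0 + k * step for k in range(1, factor)]


def output_frame_count(captured_frames: int, factor: int) -> int:
    """Frames in the slowed-down clip: every gap between consecutive captured
    frames gains factor - 1 synthetic frames."""
    gaps = max(captured_frames - 1, 0)
    return captured_frames + gaps * (factor - 1)
```

For instance, one second of 50 fps footage (50 frames) slowed 4× becomes 197 frames, which is just under four seconds of playback at 50 fps. The harder problem, producing frames that look real rather than blended, is exactly what the generative model is for.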

AI also enhances the LSM-VIA Zoom feature mentioned earlier. With automatic object tracking, it speeds up operations and instantly provides operators with fresh perspectives. In addition, automated 9:16 cropping streamlines the creation of platform-ready clips for social media.
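Combining object tracking with vertical cropping boils down to simple geometry: cut a full-height 9:16 window out of the landscape frame, centred on the tracked subject and kept inside the frame. The sketch below assumes the tracker already supplies the subject's horizontal pixel position; the `Crop` type and `vertical_crop` function are hypothetical illustrations, not part of any EVS product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Crop:
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int


def vertical_crop(frame_w: int, frame_h: int, center_x: float) -> Crop:
    """Largest 9:16 window spanning the full frame height, horizontally
    centred on a tracked object and clamped so it never leaves the frame."""
    width = round(frame_h * 9 / 16)
    if width > frame_w:
        raise ValueError("frame is too narrow for a full-height 9:16 crop")
    left = round(center_x - width / 2)
    left = max(0, min(left, frame_w - width))  # clamp to the frame borders
    return Crop(x=left, y=0, width=width, height=frame_h)
```

For a 1920×1080 frame the window is 608 pixels wide, and a subject near either edge simply pins the window to that edge. A production system would likely also smooth the tracked position over time so the crop does not jitter; that step is omitted here.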

For operators, it means richer content and faster workflows. For viewers, it means a more immersive and emotional experience on screen.

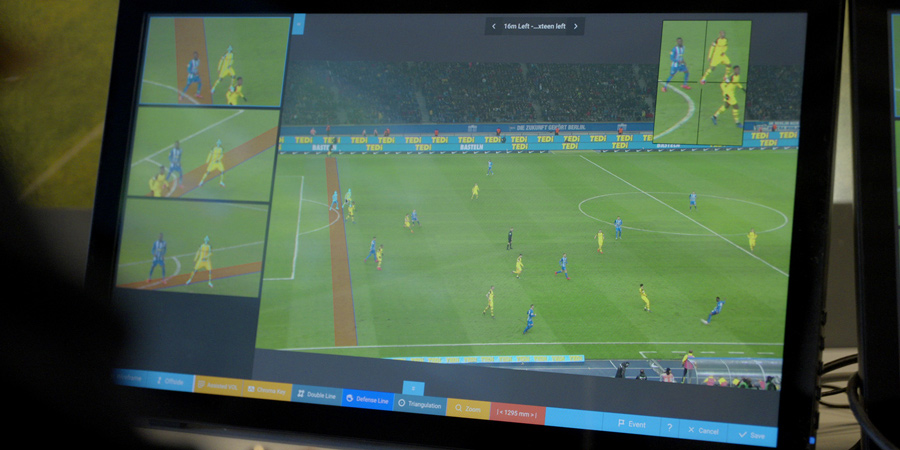

With our Xeebra VAR system, we focused on how AI could make officiating faster and more precise. By automating offside line analysis with an AI-powered virtual line certified by FIFA, we’ve turned what was once a manual, time-consuming process into a near-instant one.

Other Xeebra AI-driven capabilities include skeleton tracking and deblurring, providing referees with clearer, more readable footage that supports fair, confident decisions under pressure.

AI-powered offside line integrated into EVS’s Xeebra VAR system

In VIA MAP (our media asset platform for news, sports, and more), AI streamlines large-scale storytelling. Features like speech-to-text transcription, face recognition, scene detection, and natural language search make it faster to index, navigate, and retrieve the right content, freeing teams to focus on creative storytelling.

And because these capabilities are integrated directly into our systems rather than relying on external APIs, customers benefit from smoother workflows, stronger data governance, and quicker access to the moments that matter.

Now, with our new T-Motion media production robotics offering, born from the acquisitions of Telemetrics and XD motion, we’re combining AI with advanced mechanical precision to improve efficiency as well as image quality and stability.

What makes AI development for live production uniquely challenging?

Live production leaves no room for mistakes. That’s why accuracy, latency, and reliability sit at the core of every AI tool we build.

Unlike in academia, where occasional wrong outputs are acceptable, one incorrect output in a live broadcast is one too many. Operators don’t get a second chance once content goes live, so we design our systems to deliver consistently correct results.

Latency is equally critical. Our AI must process live video streams on the fly, without interrupting the production flow. XtraMotion, for example, delivers enhanced replays in under three seconds from the moment the operator presses the button. That turnaround is essential for fast-paced sports, and it reflects the deep expertise we have built in optimizing AI algorithms for speed, often investing significant effort to cut computation times down to what live production demands.

Xeebra illustrates this balance of precision and speed as well. Referees rely on highly accurate information, but they also need it immediately, with an entire stadium waiting. Our solutions are built to provide both: fast, reliable output that supports confident decision-making.

We also consider deployment flexibility. Some use cases require on-premises processing for speed and control, while others can benefit from the scalability and flexibility of the cloud. We design our solutions to support both, depending on what best serves the customer and the production context.

And of course, the operator experience is a crucial element. AI should enhance capabilities without adding complexity, fitting seamlessly into workflows and making jobs easier under pressure.

With one button press on the LSM-VIA remote, XtraMotion instantly transforms replays with AI-driven super slow-motion, deblur, or cinematic effects.

What role do academic research and student projects play in accelerating innovation at EVS?

They play an important role in our innovation process, especially when it comes to exploring and testing new AI techniques. Academic environments are often where the most cutting-edge ideas take shape, with researchers pushing the boundaries of what's possible.

By collaborating with universities and sponsoring research, we gain access to early-stage innovations that we can later turn into real-world solutions for live production.

A great example is the academic chair “Computer Vision and Data Analysis for Sports Understanding”, which we recently launched with the University of Liège. This initiative focuses on advancing AI techniques for the automatic interpretation of sports images and video, an area with huge potential to enhance storytelling, analysis, and automation in live broadcasting.

Student projects are also a great way to experiment with new approaches and prototype ideas quickly. They allow us to test concepts that are not yet mature enough for product development but worth exploring. At the same time, these projects help us identify and attract promising new talent passionate about live production and AI.

Ultimately, these partnerships let us stay ahead of the curve. They complement our internal efforts by bringing in fresh thinking, preparing the ground for the next generation of AI-powered tools, and helping us build the team that will deliver them.

Anne-Sophie Nyssen, Rector of the University of Liège, and Serge Van Herck, CEO of EVS, sign the convention establishing a new academic chair on AI applied to sports.

As AI capabilities evolve, where do you see the greatest potential to redefine live production workflows over the next 3-5 years?

Agentic AI is emerging as one of the technologies that will most profoundly transform the broadcast industry over the next three years. Through our participation in the IBC Accelerator project “AI Assistance Agents for Live Production”, we were able to explore this technology in depth, and the experience has been genuinely eye-opening.

Because agentic AI is built on large language models, it opens the door to true natural-language interfaces between humans and machines, allowing operators to interact with complex production tools as easily as giving everyday instructions.

As part of the project, we developed an AI agent capable of responding to requests such as “improve the slow-motion quality on this clip” or “reframe it for publication on social media” and then executing the full workflow autonomously. The integration of this agent into a multi-vendor agentic ecosystem proved to be surprisingly easy and remarkably powerful, demonstrating how flexible and collaborative these systems can be.
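The general shape of such an agent can be sketched as a plan-then-execute loop: a planner turns a natural-language request into an ordered list of tool calls, and an executor runs them. In a real agentic system the planner is a large language model choosing among registered tools; here it is replaced by keyword matching purely so the example runs on its own. Every name below (`TOOLS`, `plan`, `run_agent`, and the two tools) is hypothetical and does not describe the agent built for the IBC Accelerator project.

```python
from typing import Callable, Dict, List

# Hypothetical tools; in a real deployment each would call a production system.
# Here they only tag the clip name so the executed workflow is visible.
def enhance_slow_motion(clip: str) -> str:
    return f"{clip}+slowmo"


def reframe_for_social(clip: str) -> str:
    return f"{clip}+9x16"


TOOLS: Dict[str, Callable[[str], str]] = {
    "enhance_slow_motion": enhance_slow_motion,
    "reframe_for_social": reframe_for_social,
}


def plan(request: str) -> List[str]:
    """Stand-in for the LLM planner: maps a request to an ordered list of
    tool names. A real agent would ask a language model to choose among
    TOOLS; keyword matching is used here only to keep the sketch runnable."""
    text = request.lower()
    steps: List[str] = []
    if "slow-motion" in text or "slow motion" in text:
        steps.append("enhance_slow_motion")
    if "reframe" in text or "social media" in text:
        steps.append("reframe_for_social")
    return steps


def run_agent(request: str, clip: str) -> str:
    """Execute every planned step in order, feeding each result to the next."""
    for name in plan(request):
        clip = TOOLS[name](clip)
    return clip
```

The point of this structure is the separation between planning and execution: adding a capability means registering one more tool rather than rewriting a fixed workflow.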

This, in turn, challenges the way we think about how workflows are defined and executed, opening the possibility for far more dynamic, adaptive, and intelligent production pipelines.

Looking further ahead, real-time photorealistic 3D rendering of live events is another area with exciting potential. This technology enables the creation of a digital twin — a fully reconstructed virtual environment that mirrors reality in real time.

Techniques like Neural Radiance Fields (NeRFs) and Gaussian splatting are already showing how AI can rebuild highly realistic 3D scenes from multiple camera feeds, and we expect them to mature quickly into live-ready solutions.

Within such environments, virtual cameras can be placed anywhere, enabling replays from perspectives never physically captured. This could transform how directors and producers tell the story of the action while also opening up entirely new formats for audiences, such as VR and AR experiences for viewers who want to feel as if they’re part of the action. It’s a powerful example of how AI, paired with our core production expertise, can elevate both the workflow and the overall viewing experience.
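Placing a virtual camera anywhere ultimately means rendering the reconstructed scene from a chosen viewpoint. Rendering a NeRF or Gaussian-splat scene involves far more than this, but such renderers typically rest on the same pinhole-camera geometry. The sketch below shows only that step: projecting a 3D world point into a camera placed at `eye` and aimed at `target`, assuming an ideal pinhole with no lens distortion, a z-up world, and the common x-right, y-down, z-forward camera convention. The function and parameter names are illustrative, not taken from any EVS product.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec) -> Vec:
    n = math.sqrt(_dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def project_from_virtual_camera(point: Vec, eye: Vec, target: Vec,
                                focal_px: float, cx: float, cy: float,
                                up: Vec = (0.0, 0.0, 1.0)
                                ) -> Optional[Tuple[float, float]]:
    """Pixel coordinates of `point` seen by a pinhole camera at `eye` looking
    at `target`, or None if the point lies behind the camera."""
    # Build the camera's orthonormal axes in world coordinates.
    forward = _unit(_sub(target, eye))
    right = _unit(_cross(forward, up))
    down = _cross(forward, right)
    # Express the point in camera coordinates.
    rel = _sub(point, eye)
    x, y, z = _dot(rel, right), _dot(rel, down), _dot(rel, forward)
    if z <= 0:
        return None
    # Perspective divide, then shift to the principal point (cx, cy).
    return (focal_px * x / z + cx, focal_px * y / z + cy)
```

Moving `eye` and `target` is all it takes to "place" the camera somewhere no physical camera stood; the quality of the resulting image then depends entirely on how well the 3D reconstruction covers that viewpoint.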

Olivier Barnich is Head of Innovation and Architecture at EVS. He earned an engineering degree in electronics (2004) and a PhD in computer vision (2010) from the University of Liège, where his research advanced video background subtraction. He joined EVS in 2010 and played a key role in building its Innovation team. In 2016, anticipating the rise of AI, he expanded the team with data scientists to explore its potential for transforming live broadcast production. Today, he leads efforts to shape and deliver new products and features from initial concept through full implementation.