#4

Intelligent digital zoom with super resolution algorithms

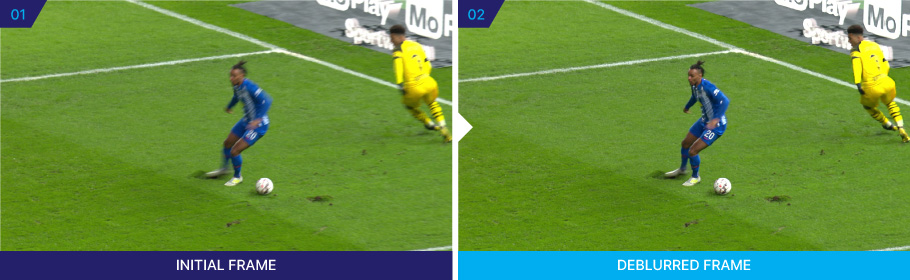

As mentioned previously, before production begins, a camera plan is established to determine the positioning of cameras and camera operators for capturing the event. The director then assigns specific objectives for each camera operator, such as focusing on particular players or capturing specific types of shots. During the event, the director maintains frequent communication with camera operators and updates their instructions to ensure that no crucial moments are missed. Nevertheless, when an unexpected event occurs, the team’s reaction may not always be quick enough to properly frame the area of interest.

Fortunately, recent advances in computer vision and image processing can be advantageously combined to accurately frame any event, with the sole requirement that the event is visible within a wide angle shot.

By combining saliency detection [18], object detection [19] and tracking [20], it is indeed possible to semi-automatically define a virtual camera trajectory that selects an area of interest in the wide-angle footage. The area of interest is then extracted from the wide-angle view and brought back to the native resolution of the production using a super resolution algorithm.

Super resolution, the process of generating high-resolution images from low-resolution inputs, has long posed a challenge in the field of computer vision. However, recent advancements in deep learning techniques have revolutionized the state-of-the-art in video super resolution, offering a wide range of solutions to enhance image quality in broadcast productions.

Approaches, such as convolutional neural networks (CNNs) [21], transformer-based methods, generative adversarial networks (GANs) [22], and diffusion-based models [23], are commonly employed to learn the mapping from low-resolution to high-resolution images.

Despite being an ill-conditioned problem, as multiple high-resolution images could correspond to a single low-resolution image, these approaches have demonstrated considerable success in producing remarkable results.

By allowing to create high-quality close-up video streams from wide-angle views, the combination of super resolution with saliency detection, object detection and tracking presents an exciting opportunity to empower operators to tell even more engaging stories with minimal additional effort or equipment costs.

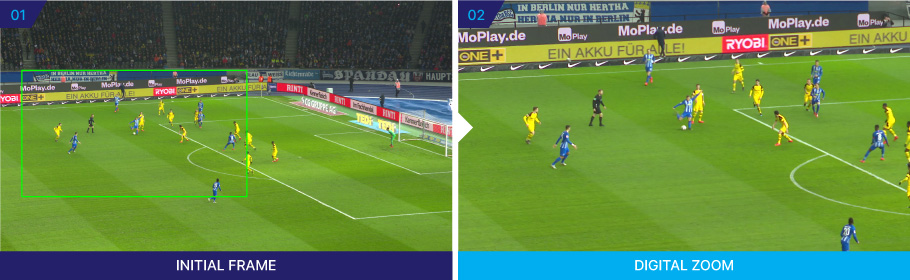

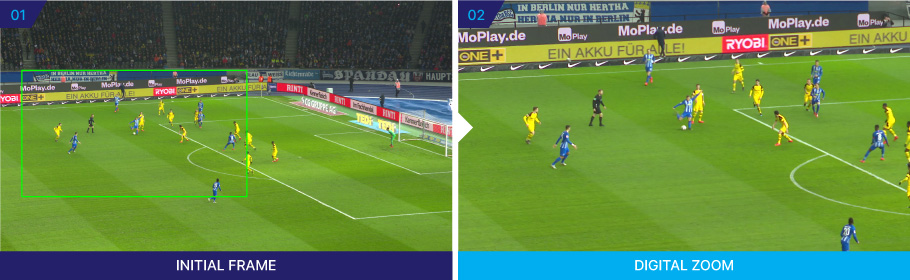

Figure 5 illustrates this application, exemplifying its potential impact.

Figure 5 - Leveraging object (person) detection and tracking to cut out an area of interest out of a wide-angle view (left-hand side) and bringing it back to the native resolution of the production using a super resolution algorithm (right-hand side).